ETL architecture is a process for extracting, transforming, and loading data from a variety of sources into a central data warehouse. The data warehouse can then be used for reporting and analytics.

ETL architecture typically follows a three-stage process:

1. Extract: data is extracted from various sources including operational databases, text files, and social media data sources.

2. Transform: the data is transformed into a consistent format that can be loaded into the data warehouse. This may involve cleansing the data, de-duplicating it, and converting it into a structure that is suitable for analysis.

3. Load: the data is loaded into the data warehouse.
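
The three stages can be sketched as three small Python functions. This is a minimal illustration under stated assumptions, not a reference implementation. The CSV data, the `customers` table, and the cleansing rules (trim names, upper-case country codes, drop repeated IDs) are made up for the example, and an in-memory SQLite database stands in for the data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source data: a CSV export from an operational system.
RAW_CSV = """id,name,country
1, Alice ,us
2,Bob,GB
2,Bob,GB
3,Carol,de
"""

def extract(text):
    """Extract: read raw rows from a CSV source."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: cleanse values, normalize formats, and de-duplicate by id."""
    seen = set()
    clean = []
    for row in rows:
        key = int(row["id"])
        if key in seen:
            continue  # drop duplicate records
        seen.add(key)
        clean.append((key, row["name"].strip(), row["country"].strip().upper()))
    return clean

def load(records, conn):
    """Load: write the transformed records into the warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers (id INTEGER PRIMARY KEY, name TEXT, country TEXT)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", records)
    conn.commit()

# An in-memory SQLite database stands in for the data warehouse (assumption).
warehouse = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), warehouse)
rows = warehouse.execute("SELECT * FROM customers ORDER BY id").fetchall()
print(rows)  # [(1, 'Alice', 'US'), (2, 'Bob', 'GB'), (3, 'Carol', 'DE')]
```

Keeping each stage in its own function means a source or target can be swapped without touching the other two stages.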

ETL architecture refers to the process of extracting, transforming, and loading data from a source system into a destination system. The destination can be a database, a data warehouse, or a data mart.

What is ETL architecture?

The ETL process is a common way to collect data from various sources, transform it according to business rules, and load it into a destination data store. This process can be used to collect data from databases, flat files, and other sources. The data can be transformed using various methods, such as filtering, aggregation, and sorting. The data can then be loaded into a destination data store, such as a database or a data warehouse.
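
The transformation methods mentioned above (filtering, aggregation, and sorting) can be illustrated on a few in-memory records. The order data and the rule that only paid orders count are assumptions invented for this sketch; real business rules come from the reporting requirements.

```python
from collections import defaultdict

# Hypothetical extracted order records.
orders = [
    {"region": "north", "amount": 120.0, "status": "paid"},
    {"region": "south", "amount": 80.0, "status": "cancelled"},
    {"region": "south", "amount": 200.0, "status": "paid"},
    {"region": "north", "amount": 30.0, "status": "paid"},
    {"region": "east", "amount": 50.0, "status": "paid"},
]

# Filtering: keep only the records the (assumed) business rule cares about.
paid = [o for o in orders if o["status"] == "paid"]

# Aggregation: total revenue per region.
totals = defaultdict(float)
for o in paid:
    totals[o["region"]] += o["amount"]

# Sorting: order regions by revenue, highest first.
report = sorted(totals.items(), key=lambda item: item[1], reverse=True)
print(report)  # [('south', 200.0), ('north', 150.0), ('east', 50.0)]
```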

ETL is a process that helps organizations effectively manage their data. It involves extracting data from multiple data sources, transforming it into a consistent format, and loading it into a data warehouse or other target system. This process can help organizations improve their data quality and better understand their data.

What are the 3 layers in ETL?

ETL is a process that involves extracting data from a source system, transforming it to meet the requirements of the target system, and loading it into the target system. This process is typically used to migrate data from one system to another, or to update data in a target system.
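
When ETL is used to update a target system rather than migrate everything once, one common option is incremental extraction: remember a "watermark" (here, the latest `updated_at` value seen) and pull only rows changed since then. The sketch assumes a hypothetical `products` table with an ISO-8601 `updated_at` text column, which sorts correctly as a string. It is one approach among several (change data capture is another), not a prescribed method.

```python
import sqlite3

# Hypothetical source table with a last-modified timestamp column.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, price REAL, updated_at TEXT)")
source.executemany(
    "INSERT INTO products VALUES (?, ?, ?)",
    [(1, 9.99, "2024-01-01"), (2, 19.99, "2024-01-05"), (3, 4.50, "2024-01-10")],
)

def extract_changed(conn, watermark):
    """Extract only rows modified after the last successful run."""
    return conn.execute(
        "SELECT id, price, updated_at FROM products WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()

last_run = "2024-01-03"  # watermark saved by the previous run (assumed value)
changed = extract_changed(source, last_run)
# Advance the watermark only if something new was extracted.
new_watermark = changed[-1][2] if changed else last_run
print(changed)        # [(2, 19.99, '2024-01-05'), (3, 4.5, '2024-01-10')]
print(new_watermark)  # 2024-01-10
```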

An ETL system is responsible for extracting data from a variety of sources, transforming it into a consistent format, and then loading it into a target data store. The key components of an ETL system can be categorized as extract, transform, and load.

The extract component is responsible for connecting to the various data sources and extracting the data. The data sources can be in a variety of formats and can be located both on-premises and in the cloud.
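
A minimal sketch of an extract component that reads two differently shaped sources, a CSV export and a JSON document, and emits records with a common shape. The source contents and field names (`customer_id`, `contact.email`) are hypothetical; real extractors would read from files, databases, or APIs rather than string literals.

```python
import csv
import io
import json

# Hypothetical sources: a CSV export and a JSON API response with different field names.
CSV_SOURCE = "customer_id,email\n1,a@example.com\n2,b@example.com\n"
JSON_SOURCE = '[{"id": 3, "contact": {"email": "c@example.com"}}]'

def extract_csv(text):
    """Yield records from a CSV source, mapped to the common shape."""
    for row in csv.DictReader(io.StringIO(text)):
        yield {"id": int(row["customer_id"]), "email": row["email"]}

def extract_json(text):
    """Yield records from a nested JSON source, mapped to the common shape."""
    for item in json.loads(text):
        yield {"id": item["id"], "email": item["contact"]["email"]}

# Each extractor understands one source format and emits the same record shape.
records = [*extract_csv(CSV_SOURCE), *extract_json(JSON_SOURCE)]
print(records)
```

Whether field renaming happens during extraction, as here, or in the transform step is a design choice; some teams keep extractors as thin as possible.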

The transformation component is responsible for taking the extracted data and converting it into the desired format. This may involve cleansing the data, performing calculations, and/or merging data from multiple sources.
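
The transformation duties listed above (cleansing, calculations, and merging data from multiple sources) can be combined in one function. The CRM and billing records and the 20% tax rate are illustrative assumptions only.

```python
# Hypothetical extracted data from two systems: a CRM and a billing system.
crm = [
    {"customer_id": 1, "name": "  Alice "},
    {"customer_id": 2, "name": "Bob"},
]
billing = [
    {"customer_id": 1, "net": 100.0},
    {"customer_id": 1, "net": 50.0},
    {"customer_id": 2, "net": 20.0},
]

TAX_RATE = 0.2  # assumed rate, for illustration only

def transform(crm_rows, billing_rows):
    # Cleansing: trim stray whitespace from names.
    names = {r["customer_id"]: r["name"].strip() for r in crm_rows}
    # Calculation: gross amount per invoice, summed per customer.
    gross = {}
    for b in billing_rows:
        cid = b["customer_id"]
        gross[cid] = gross.get(cid, 0.0) + b["net"] * (1 + TAX_RATE)
    # Merging: join the two sources on customer_id.
    return [
        {"customer_id": cid, "name": names[cid], "gross_total": round(gross.get(cid, 0.0), 2)}
        for cid in sorted(names)
    ]

result = transform(crm, billing)
print(result)
# [{'customer_id': 1, 'name': 'Alice', 'gross_total': 180.0}, {'customer_id': 2, 'name': 'Bob', 'gross_total': 24.0}]
```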

The load component is responsible for loading the transformed data into the target data store. The target data store can be a relational database, a data warehouse, or a NoSQL database.
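
One common loading pattern for a relational target is an upsert, so that re-running a load updates existing rows instead of creating duplicates. This sketch uses SQLite's `INSERT ... ON CONFLICT ... DO UPDATE` syntax (available in SQLite 3.24 and later) with a hypothetical `dim_customer` table; other databases express the same idea differently, for example with a `MERGE` statement.

```python
import sqlite3

def load(conn, records):
    """Load transformed records; re-running the load updates rows instead of duplicating them."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS dim_customer ("
        "customer_id INTEGER PRIMARY KEY, name TEXT, gross_total REAL)"
    )
    with conn:  # commit on success, roll back on error
        conn.executemany(
            "INSERT INTO dim_customer (customer_id, name, gross_total) VALUES (?, ?, ?) "
            "ON CONFLICT(customer_id) DO UPDATE SET "
            "name = excluded.name, gross_total = excluded.gross_total",
            records,
        )

warehouse = sqlite3.connect(":memory:")
load(warehouse, [(1, "Alice", 180.0), (2, "Bob", 24.0)])
load(warehouse, [(2, "Bob", 30.0)])  # a later run with a corrected value
rows = warehouse.execute("SELECT * FROM dim_customer ORDER BY customer_id").fetchall()
print(rows)  # [(1, 'Alice', 180.0), (2, 'Bob', 30.0)]
```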

Although an ETL system can have other subcomponents, this article focuses on these three.

Is SQL an ETL tool?

In the first stage of the ETL workflow, extraction often draws on database management systems, metrics sources, and even simple storage such as spreadsheets. SQL commands can facilitate this stage by fetching data from different tables or even separate databases. This stage matters because it produces the raw data that is transformed in the next stage.

SQL and ETL are two concepts that have long been used to manage data. SQL stands for Structured Query Language, a language for querying relational databases; you use it to retrieve and manipulate data in a database. ETL stands for Extract, Transform, and Load, which is a process rather than a language. SQL is therefore not an ETL tool in itself, but SQL queries are often used to carry out the extract and transform steps of an ETL process.
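
As an illustration of SQL doing the extract step, the sketch below creates two separate SQLite database files and then uses a single query to join a table from each. The file names, tables, and data are hypothetical. `ATTACH DATABASE` is SQLite-specific; other systems reach across databases in their own ways (for example, linked servers or foreign data wrappers).

```python
import os
import sqlite3
import tempfile

# Two hypothetical source databases stored as separate SQLite files.
tmp = tempfile.mkdtemp()
crm_path = os.path.join(tmp, "crm.db")
sales_path = os.path.join(tmp, "sales.db")

crm = sqlite3.connect(crm_path)
crm.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
crm.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Alice"), (2, "Bob")])
crm.commit()
crm.close()

sales = sqlite3.connect(sales_path)
sales.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)")
sales.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)", [(10, 1, 25.0), (11, 2, 40.0), (12, 1, 15.0)]
)
sales.commit()

# Extraction: attach the second database and join a table from each in one SQL query.
sales.execute("ATTACH DATABASE ? AS crm", (crm_path,))
extracted = sales.execute(
    """
    SELECT c.name, o.amount
    FROM orders AS o
    JOIN crm.customers AS c ON c.id = o.customer_id
    ORDER BY o.id
    """
).fetchall()
print(extracted)  # [('Alice', 25.0), ('Bob', 40.0), ('Alice', 15.0)]
```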

What are the five main steps in the ETL process?

The core of the ETL process is the extraction, transformation, and loading of data, and these steps matter most for ensuring data quality. Data cleansing is also important, because it ensures that the data is free of errors before it reaches the target.
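
Data cleansing is often implemented as a set of validation rules applied before loading, with failing rows set aside for review instead of being silently dropped. The rules here (an email must contain `@`, a signup date must be a valid ISO date) and the sample rows are deliberately simple assumptions for the sketch.

```python
from datetime import date

# Hypothetical raw rows; some break the assumed quality rules below.
raw = [
    {"id": "1", "email": "a@example.com", "signup": "2024-02-01"},
    {"id": "2", "email": "not-an-email", "signup": "2024-02-03"},
    {"id": "3", "email": "c@example.com", "signup": "2024-13-40"},
    {"id": "4", "email": "d@example.com", "signup": "2024-03-15"},
]

def validate(row):
    """Return a list of problems; an empty list means the row is clean."""
    problems = []
    if "@" not in row["email"]:
        problems.append("invalid email")
    try:
        date.fromisoformat(row["signup"])
    except ValueError:
        problems.append("invalid date")
    return problems

clean, rejected = [], []
for row in raw:
    issues = validate(row)
    if issues:
        rejected.append((row["id"], issues))  # quarantined for review, not loaded
    else:
        clean.append(row)

print([r["id"] for r in clean])  # ['1', '4']
print(rejected)  # [('2', ['invalid email']), ('3', ['invalid date'])]
```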

A data warehouse is built using data extractions, data transformations, and data loads. Extractions pull data from sources, transformations reshape it according to BI reporting requirements, and loads write it into the target data warehouse. Data loads are typically performed using an ETL tool.

What is the difference between ETL testing and database testing?

ETL Testing:

ETL testing is a process of testing data in a data warehouse system. This type of testing is necessary to ensure that data is accurately extracted, transformed, and loaded into the data warehouse. In order to perform ETL testing, testers must have a solid understanding of the data warehouse schema and the ETL process.

Database Testing:

Database testing is a process of testing data in a transactional database. This type of testing is necessary to ensure that data is accurately stored and retrieved from the database. In order to perform database testing, testers must have a solid understanding of the database schema and the database query language.
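
A database test can be as small as checking that a written row reads back unchanged and that the schema rejects invalid data. This sketch uses an in-memory SQLite database and a hypothetical `accounts` table with a `CHECK` constraint; a real test suite would run against the actual schema, usually through a test framework such as `unittest` or pytest.

```python
import sqlite3

# Hypothetical schema: balances must never be negative.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance REAL NOT NULL CHECK (balance >= 0))"
)

# Test 1: a row that is written can be read back unchanged.
conn.execute("INSERT INTO accounts VALUES (1, 100.0)")
assert conn.execute("SELECT balance FROM accounts WHERE id = 1").fetchone() == (100.0,)

# Test 2: the schema rejects data that violates its constraints.
try:
    conn.execute("INSERT INTO accounts VALUES (2, -5.0)")
    constraint_enforced = False
except sqlite3.IntegrityError:
    constraint_enforced = True
assert constraint_enforced
print("database tests passed")
```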

ETL testing is a multi-level, data-centric process. It often relies on complex SQL queries to verify that millions of records from various source systems have been extracted, transformed, and loaded into the target data warehouse correctly. The goal is to confirm that data is accurately extracted from the source systems, transformed according to the target data warehouse's requirements, and loaded into the warehouse without any data loss.
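
A common ETL test is source-to-target reconciliation: compare row counts, a control total, and the set of keys on both sides to detect lost or extra records. The tables and rows below are hypothetical, and one row is deliberately left out of the target to show a failing check.

```python
import math
import sqlite3

# Hypothetical source system and warehouse, both in-memory for this sketch.
source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
target.execute("CREATE TABLE fact_orders (order_id INTEGER, amount REAL)")
rows = [(1, 10.0), (2, 20.5), (3, 7.25)]
source.executemany("INSERT INTO orders VALUES (?, ?)", rows)
target.executemany("INSERT INTO fact_orders VALUES (?, ?)", rows[:2])  # simulate a lost row

def profile(conn, table, key):
    """Return the row count, amount total, and set of keys for one table.

    Table and column names are trusted constants here, so building the SQL
    with an f-string is acceptable; never do this with user input.
    """
    count, total = conn.execute(f"SELECT COUNT(*), TOTAL(amount) FROM {table}").fetchone()
    keys = {k for (k,) in conn.execute(f"SELECT {key} FROM {table}")}
    return count, total, keys

src_count, src_total, src_keys = profile(source, "orders", "id")
tgt_count, tgt_total, tgt_keys = profile(target, "fact_orders", "order_id")

counts_match = src_count == tgt_count
totals_match = math.isclose(src_total, tgt_total)
missing = sorted(src_keys - tgt_keys)
print(counts_match, totals_match, missing)  # False False [3]
```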

How many types of ETL tools are there?

Enterprise Software ETL

Enterprise software ETL tools are usually very comprehensive and offer a wide range of features. They are often used by large organizations with complex data needs. Some of the most popular enterprise software ETL tools include IBM DataStage, Oracle Data Integrator, and Microsoft SSIS.

Open Source ETL

Open source ETL tools are typically free and offer a good selection of features. They are often used by small and medium-sized organizations. Some of the most popular open source ETL tools include Talend Open Studio, Pentaho Data Integration, and Jitterbit Data Integrator.

Custom ETL

Custom ETL tools are built specifically for a particular organization and its data needs. They offer a high degree of flexibility and can be tailor-made to fit the organization’s specific requirements. Custom ETL tools can be expensive to develop and maintain.

ETL Cloud Services

ETL cloud services are becoming increasingly popular because they offer a pay-as-you-go model that can be very cost-effective. They are also convenient, since they can be accessed from anywhere with an internet connection. Some of the most popular ETL cloud services include Amazon Elastic MapReduce and Google BigQuery.

An ETL pipeline is a set of processes to extract data from one system, transform it, and load it into a target repository. ETL is an acronym for “Extract, Transform, and Load” and describes the three stages of the process.

The Extract stage typically involves retrieving data from one or more sources. The Transform stage involves converting the data into a format that is suitable for the target repository. The Load stage involves loading the data into the target repository.

ETL pipelines can be used to migrate data from one repository to another, or to feed a data warehouse while applying transformations such as aggregation and cleansing along the way.
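
One way to structure such a pipeline in Python is as a chain of generator stages, so records stream through cleansing and enrichment one at a time rather than being held in memory between steps. The stage names and record fields are hypothetical; this is a plain-Python sketch, not the API of any ETL framework.

```python
from typing import Callable, Iterable, Iterator

Record = dict
Stage = Callable[[Iterable[Record]], Iterator[Record]]

def run_pipeline(source: Iterable[Record], *stages: Stage) -> list:
    """Chain stages lazily; each record flows through every stage in order."""
    stream = iter(source)
    for stage in stages:
        stream = stage(stream)
    return list(stream)  # nothing runs until the final list() pulls records through

def drop_empty(records):
    # Cleansing: skip records without a SKU.
    for r in records:
        if r.get("sku"):
            yield r

def add_total(records):
    # Enrichment: add a derived line total.
    for r in records:
        yield {**r, "total": r["qty"] * r["price"]}

result = run_pipeline(
    [
        {"sku": "A1", "qty": 2, "price": 3.0},
        {"sku": "", "qty": 1, "price": 9.0},
        {"sku": "B2", "qty": 1, "price": 4.5},
    ],
    drop_empty,
    add_total,
)
print(result)
```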

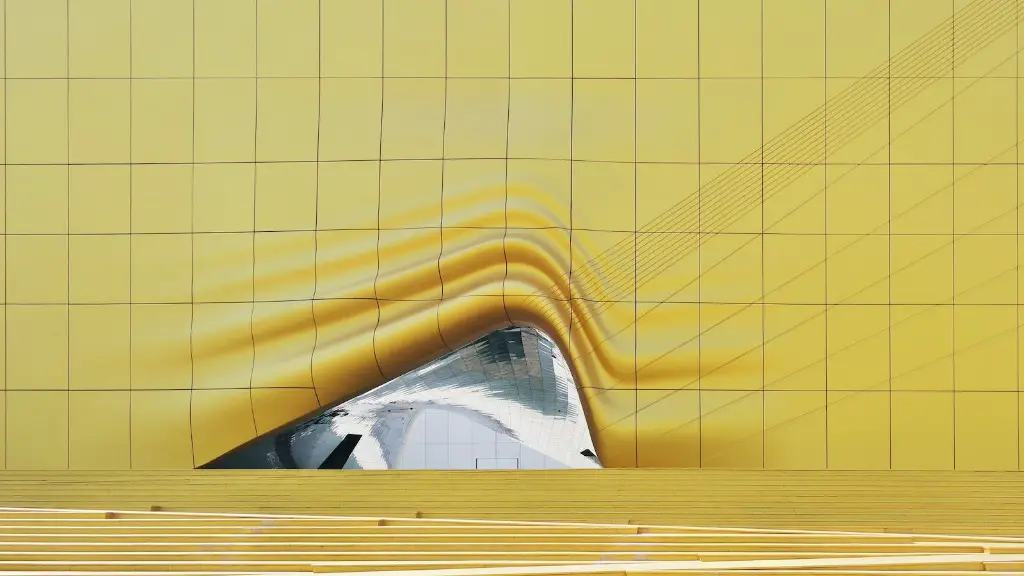

Data warehouses and data lakes are increasingly popular ways to store and analyze data. An ETL tool can help to set the stage for long-term analysis and usage of such data. Examples of data that can be stored in a data warehouse or data lake include banking data, insurance claims, and retail sales history.

What are the ETL layers?

ETL stands for “extract, transform, load.” These are the three processes that, in combination, move data from one database, multiple databases, or other sources to a unified repository—typically a data warehouse.

The extract process involves extracting data from various sources. The transform process involves transforming the data into a format that can be loaded into the target repository. The load process involves loading the data into the target repository.

ETL is a powerful approach for moving data from disparate sources into a unified repository. It enables organizations to make better decisions by providing access to data that would otherwise be siloed.

Many scripting languages are popular for ETL, with Bash, Python, and Perl among the most common. Developers with a software engineering background often have strong expertise in languages such as C++ and Java, which are also widely used for ETL.

Which programming language is best for ETL?

Python is a powerful language for building ETL pipelines, and many Python frameworks can help you create successful ones. Bubbles, Bonobo, pygrametl, and Mara are some of the top Python ETL frameworks. Each has its own strengths and weaknesses, so choose the one that best suits your needs.

Data is becoming increasingly complex and varied. There are more sources of data than ever before, and more ways to store and process it. This makes Extract, Transform, and Load (ETL) tools more important than ever.

There are many different ETL tools available, each with its own strengths and weaknesses. In this article, we will take a look at 15 of the best ETL tools currently available.

1) Hevo

Hevo is a cloud-based ETL tool that offers a simple, no-code interface. It is easy to use and can save you a lot of time and effort.

2) Integrate.io

Integrate.io is a powerful ETL tool that can handle complex data. It offers a wide range of features and is very flexible.

3) Skyvia

Skyvia is a cloud-based ETL tool that is easy to use and offers a wide range of features. It is also very affordable.

4) Altova MapForce

Altova MapForce is a powerful ETL tool that offers a wide range of features. It is also very easy to use.

5) IRI Voracity

Wrap Up

ETL architecture refers to the process of Extracting, Transforming and Loading data from a variety of sources into a central location or data warehouse.

In ETL architecture, data is first extracted from various source systems. This data is then transformed into a common format that can be loaded into a target data warehouse. The transformed data is then loaded into the data warehouse for reporting and analysis. This architecture is designed to provide a single, centralized view of data from multiple source systems.