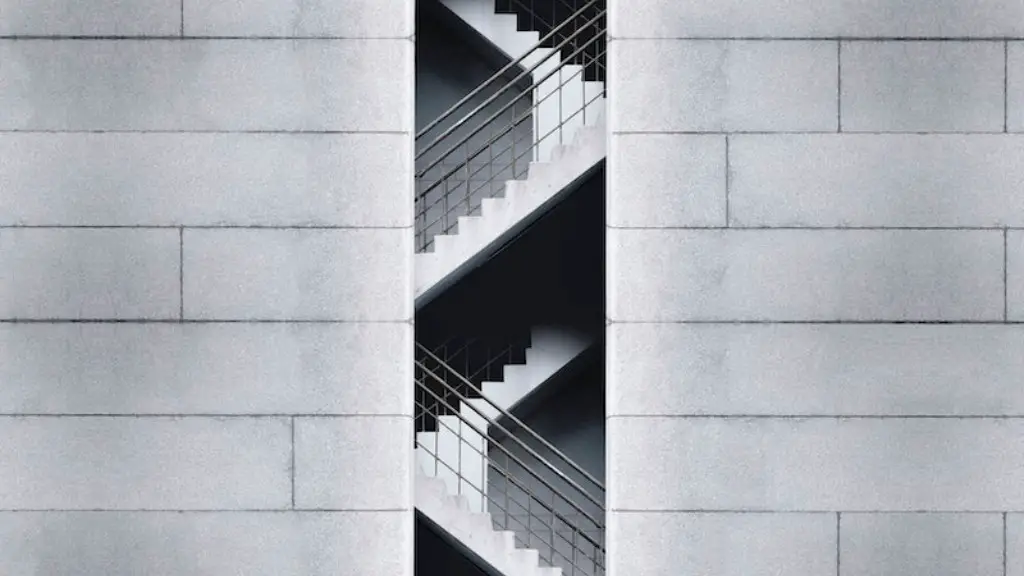

Pipeline architecture is a type of computer architecture where processing units are divided into a series of stages. Each stage performs a specific task and passes its output to the next stage. This type of architecture is most commonly used in CPUs and GPUs.

Dividing the work into interconnected stages improves system performance because multiple instructions can be processed simultaneously, each in a different stage.

What is meant by pipeline architecture?

Pipelining is an efficient technique for breaking a sequential process into sub-operations, each executed in a dedicated segment that operates concurrently with all the other segments. This technique is used in computer architecture, data processing, and other applications that must process large amounts of data quickly.
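The same idea can be sketched in software. The example below is a minimal illustration, assuming three hypothetical sub-operations (parse text, square the number, format the result), each running in its own thread as a separate segment. Queues connect the segments, so while one segment works on item *i*, the segment before it can already be working on item *i + 1*. The names `stage`, `run_pipeline`, and `SENTINEL` are illustrative, not part of any library.

```python
import queue
import threading

SENTINEL = object()  # marks the end of the input stream

def stage(func, inbox, outbox):
    """Run one pipeline segment: apply func to each item and pass it downstream."""
    while True:
        item = inbox.get()
        if item is SENTINEL:
            outbox.put(SENTINEL)  # propagate shutdown to the next segment
            break
        outbox.put(func(item))

def run_pipeline(items, funcs):
    """Connect one thread per sub-operation with queues and stream items through."""
    queues = [queue.Queue() for _ in range(len(funcs) + 1)]
    threads = [
        threading.Thread(target=stage, args=(f, queues[i], queues[i + 1]))
        for i, f in enumerate(funcs)
    ]
    for t in threads:
        t.start()
    for item in items:
        queues[0].put(item)
    queues[0].put(SENTINEL)

    # Collect finished items from the last queue until the sentinel arrives.
    results = []
    while (out := queues[-1].get()) is not SENTINEL:
        results.append(out)
    for t in threads:
        t.join()
    return results

# Hypothetical sub-operations: parse text, square the number, format the result.
print(run_pipeline(["1", "2", "3"], [int, lambda x: x * x, lambda x: f"<{x}>"]))
# ['<1>', '<4>', '<9>']
```

In CPython the global interpreter lock limits the speedup for CPU-bound stages; the point of the sketch is the structure (segments working concurrently on different items), not raw performance.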

A pipeline system is also an efficient way to manage a manufacturing process: it reduces the need for manual labor and ensures that each task is carried out consistently. It also reduces the chance of errors and increases the overall efficiency of the process.

What is an example of pipelining?

Pipelining is a commonly used concept in everyday life. For example, in the assembly line of a car factory, each specific task—such as installing the engine, installing the hood, and installing the wheels—is often done by a separate work station. The stations carry out their tasks in parallel, each on a different car.

In computer architecture, pipelining lets several instructions be in progress at the same time, each in a different stage. This improves instruction throughput (the number of instructions completed per unit of time) and shortens the delay between successive completed instructions, although it does not make any single instruction finish faster.

What is the basic concept of pipelining?

Pipelining is a way of organizing how the processor executes instructions. The pipeline is a “logical pipeline” that lets the processor perform each instruction in multiple steps, with the steps of consecutive instructions overlapping in a continuous, orderly manner.

Pipelining improves processor performance by increasing throughput rather than by reducing the time taken to execute an individual instruction. Because each instruction is broken into smaller steps, the processor can work on several instructions at the same time, each at a different step.

There are various types of pipelines, such as instruction pipelines, data pipelines, and memory pipelines. Each type of pipeline has its own set of benefits and drawbacks.

Instruction pipelines are the most common type; they increase the rate at which the processor completes instructions. Data pipelines aim to reduce the time the processor spends waiting for data, and memory pipelines aim to reduce the time it spends waiting on memory accesses.


The classic RISC pipeline has five stages: instruction fetch, instruction decode, execute, memory access, and writeback. Each instruction spends five clock cycles passing through these stages, but once the pipeline is full this simple design can complete most RISC instructions at a rate of one per clock cycle. More complex instructions, however, may take multiple clock cycles to execute.
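A quick way to see how the five stages overlap is to print a pipeline diagram. The sketch below assumes an ideal pipeline (one instruction enters per cycle, every stage takes exactly one cycle, no stalls); the abbreviations IF, ID, EX, MEM, and WB stand for the five stages named above, and `pipeline_diagram` is a hypothetical helper.

```python
STAGES = ["IF", "ID", "EX", "MEM", "WB"]  # classic RISC five-stage pipeline

def pipeline_diagram(num_instructions):
    """Return one row per instruction showing which stage it occupies in each cycle.

    Assumes an ideal pipeline: one instruction enters per cycle and there are no stalls.
    """
    total_cycles = len(STAGES) + num_instructions - 1
    rows = []
    for i in range(num_instructions):
        row = []
        for cycle in range(total_cycles):
            step = cycle - i  # instruction i enters IF in cycle i
            row.append(STAGES[step] if 0 <= step < len(STAGES) else "")
        rows.append(row)
    return rows

for i, row in enumerate(pipeline_diagram(3)):
    print(f"I{i + 1}: " + " ".join(f"{s:>3}" for s in row))
```

With three instructions the diagram spans seven cycles: I1 finishes in cycle 5, I2 in cycle 6, and I3 in cycle 7, so after the first result one instruction completes every cycle.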

What is a pipeline and why is it important?

Pipelines are a vital part of the transportation infrastructure in the United States. They are used to transport raw materials from production areas to refineries and chemical plants, and then to move the finished products to gasoline terminals, natural gas power plants, and other end users.

Pipelines are safe, efficient, and cost-effective, and they play a crucial role in the economy; much of the U.S. economy depends on them.

Oil pipelines are made from steel or plastic tubes, which are usually buried, and the oil is pushed along by pump stations spaced along the line. Natural gas travels through pipelines as a compressed gas, moved by compressor stations; natural gas liquids (NGLs) are the heavier hydrocarbons, such as propane and butane, that are separated from it. Natural gas pipelines are constructed of carbon steel.

How do you design a data pipeline architecture?

Data pipelines are a necessary part of any data-driven operation, but they can be difficult to design and build. By following the steps below, you can create an effective data pipeline that gets your data where it needs to go.

Step 1: Determine the goal

The first step is to determine the goal of the data pipeline. What is the end goal of the data? Is it being collected for analysis, reporting, or operational purposes? Once the goal is determined, you can start to map out the steps needed to get the data from its source to its destination.

Step 2: Choose the data sources

There are many possible data sources, so it’s important to choose the ones that will best help you achieve your goal. One way to narrow down the options is to consider what data is already available and what data would need to be collected.

Step 3: Determine the data ingestion strategy

The data ingestion strategy will determine how the data is collected from the data sources. There are many possible options, such as using an API, web scraping, or batch jobs. The ingestion strategy should be chosen based on the data sources and the goal of the data pipeline.
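For the batch-job option, the core idea is to pull records from a source and hand them to a loader in fixed-size groups. The sketch below is a minimal illustration under stated assumptions: the source is any Python iterable of records, and `load_batch` is a hypothetical callback standing in for a real loader such as a database bulk insert.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, List, TypeVar

T = TypeVar("T")

def batched(records: Iterable[T], size: int) -> Iterator[List[T]]:
    """Yield successive lists of up to `size` records from any iterable source."""
    if size < 1:
        raise ValueError("size must be at least 1")
    it = iter(records)
    while batch := list(islice(it, size)):
        yield batch

def ingest(source: Iterable[dict], load_batch: Callable[[List[dict]], None],
           batch_size: int = 500) -> int:
    """Pull records from a source and pass them to `load_batch` in fixed-size batches.

    `load_batch` is a hypothetical loader callback; returns the number of records ingested.
    """
    count = 0
    for batch in batched(source, batch_size):
        load_batch(batch)
        count += len(batch)
    return count

# Usage: collect batches in a list instead of writing to a real destination.
loaded = []
n = ingest(({"id": i} for i in range(7)), loaded.append, batch_size=3)
print(n, [len(b) for b in loaded])  # 7 [3, 3, 1]
```

Batching trades latency for efficiency: larger batches mean fewer round trips to the destination, but each record waits longer before it is loaded.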

Step 4: Design the data

The “pipeline” analogy describes how information flows through a system. In computer science, it usually refers to data being processed through a series of steps, each of which transforms the data in some way.
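In Python, this kind of step-by-step data pipeline is often written as chained generators, where each step consumes the previous step's output lazily. The sketch below assumes a hypothetical input format of `name,amount` text lines and three illustrative steps (clean, parse, filter); none of these names come from a specific library.

```python
from typing import Iterable, Iterator

def read_lines(lines: Iterable[str]) -> Iterator[str]:
    """Source step: strip whitespace and skip blank lines."""
    for line in lines:
        line = line.strip()
        if line:
            yield line

def parse(rows: Iterable[str]) -> Iterator[dict]:
    """Transform step: split 'name,amount' text into a record."""
    for row in rows:
        name, amount = row.split(",")
        yield {"name": name, "amount": float(amount)}

def only_large(records: Iterable[dict], threshold: float) -> Iterator[dict]:
    """Filter step: keep records whose amount is at or above the threshold."""
    return (r for r in records if r["amount"] >= threshold)

raw = ["alice,120.5", "", "bob,30", "carol,99.9", "dave,250"]
result = list(only_large(parse(read_lines(raw)), threshold=100))
print(result)
# [{'name': 'alice', 'amount': 120.5}, {'name': 'dave', 'amount': 250.0}]
```

Because generators are lazy, each record flows through all three steps before the next one is read, so the pipeline never holds the whole dataset in memory.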

What is a pipeline in DevOps?

A DevOps pipeline is a great way to improve the efficiency of your development and operations teams. By automating the process of code deployment, you can eliminate the need for manual intervention, which can save time and money. In addition, by using a pipeline, you can ensure that your code is tested and deployed in a consistent and reproducible manner.
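At its core, a DevOps (CI/CD) pipeline runs a fixed sequence of stages and stops as soon as one fails, so broken code never reaches deployment. The sketch below models that behavior only; the stage names are illustrative, and each stage is a hypothetical callable that would, in a real pipeline, invoke a build tool, test runner, or deploy script.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]  # (stage name, action that returns True on success)

def run_stages(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first failure, like a CI/CD pipeline.

    Returns (succeeded, names of the stages that ran).
    """
    ran = []
    for name, action in stages:
        ran.append(name)
        if not action():
            print(f"stage '{name}' failed; later stages skipped")
            return False, ran
        print(f"stage '{name}' passed")
    return True, ran

# Hypothetical stages; the failing test stage prevents deployment.
ok, ran = run_stages([
    ("build", lambda: True),
    ("test", lambda: False),  # simulate a failing test suite
    ("deploy", lambda: True),
])
print(ok, ran)  # False ['build', 'test']
```

Failing fast like this is what makes the process reproducible: every change goes through the same ordered checks, and the deploy stage only runs when all earlier stages pass.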

Pipelining is a technique used in computer architecture to improve performance by dividing the work of executing each instruction into stages handled by separate hardware units. It is used in single-core processors as well as in each core of a multi-core processor. Because every stage works on a different instruction at the same time, more work is completed in a given period, making better use of the processor’s resources.

What is the difference between pipeline and non-pipeline architecture?

Pipelining is a technique used in computer architecture whereby multiple instructions are overlapped during execution. This means that while one instruction is being executed, the next instruction is being decoded, and the one after that is being fetched from memory. Pipelining therefore allows a greater degree of parallelism and can lead to a faster overall execution time.

In a non-pipelined architecture, the steps involved in executing an instruction are treated as a single unit: fetching, decoding, execution, and writing back are all completed for one instruction before the next one begins, rather than being overlapped as in pipelining. Non-pipelined designs are simpler to build, but they generally take longer to execute a sequence of instructions.
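The difference can be quantified with a simple cycle count. The sketch below assumes an idealized model: *k* steps per instruction, each taking exactly one clock cycle, no stalls, and no extra delay from pipeline registers. A non-pipelined processor then needs *n × k* cycles for *n* instructions, while a pipelined one needs *k* cycles to fill the pipeline plus one cycle for each remaining instruction, that is *k + n − 1*.

```python
def non_pipelined_cycles(n: int, k: int) -> int:
    """Each of n instructions runs all k steps before the next one starts."""
    return n * k

def pipelined_cycles(n: int, k: int) -> int:
    """The first instruction takes k cycles; each later one finishes one cycle after the previous."""
    return k + n - 1 if n > 0 else 0

k = 5  # e.g. the classic five-stage RISC pipeline
for n in (1, 10, 100):
    a, b = non_pipelined_cycles(n, k), pipelined_cycles(n, k)
    print(f"n={n:>3}: non-pipelined={a:>3}, pipelined={b:>3}, speedup={a / b:.2f}")
# n=  1: non-pipelined=  5, pipelined=  5, speedup=1.00
# n= 10: non-pipelined= 50, pipelined= 14, speedup=3.57
# n=100: non-pipelined=500, pipelined=104, speedup=4.81
```

A single instruction gains nothing, but as *n* grows the speedup approaches *k*, the number of stages. Real processors fall short of this ideal because of stalls and per-stage overhead.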

Pipelines are an efficient way to transport liquids and gases over long distances. They can be laid through difficult terrain and under water, and they consume relatively little energy. Pipeline systems need relatively little maintenance and are generally regarded as safe and environmentally friendly.

What is the difference between pipeline architecture and parallel architecture?

Pipelining improves the performance of a system by breaking a task into smaller stages that operate simultaneously, each on a different item. This is especially effective when successive items are independent of each other. Parallel processing goes a step further by using duplicate hardware, so that several complete copies of the task run at the same time, which can provide an even greater performance boost.
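A rough cycle count shows the trade-off. The sketch below assumes an idealized model: a task takes *k* one-cycle steps, a pipeline is a single set of *k* stage units, and parallel processing uses `copies` duplicate non-pipelined units, each finishing one task every *k* cycles. These assumptions ignore stalls, communication, and load-balancing costs.

```python
import math

def pipelined_cycles(n: int, k: int) -> int:
    """One k-stage pipeline: k cycles to fill, then one result per cycle."""
    return k + n - 1 if n > 0 else 0

def parallel_cycles(n: int, k: int, copies: int) -> int:
    """`copies` duplicate non-pipelined units, each taking k cycles per task."""
    return math.ceil(n / copies) * k

n, k = 100, 5
print(pipelined_cycles(n, k))    # 104
print(parallel_cycles(n, k, 1))  # 500 (a single non-pipelined unit)
print(parallel_cycles(n, k, 5))  # 100
print(parallel_cycles(n, k, 10)) # 50
```

Under this model, five full copies of the hardware only slightly beat one pipeline (100 versus 104 cycles); parallelism delivers its larger gains by adding still more hardware, while pipelining gets most of its benefit from a single set of stages.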

A pipeline is a system for transporting fluids or gases over long distances. It typically consists of a network of pipes, fittings, pumps, and other infrastructure. Pipelines are used to transport a variety of materials, including oil, natural gas, and water.

Conclusion

Pipeline architecture is an approach to computer architecture in which the CPU is divided into a series of discrete units, each of which performs a specific task. The individual units are interconnected and work together to carry out the instructions of a program.

In summary, pipeline architecture describes a type of computer architecture designed for high performance through pipelining. This type of architecture is often used in CPUs and other hardware that must handle large amounts of data quickly.